Abstract

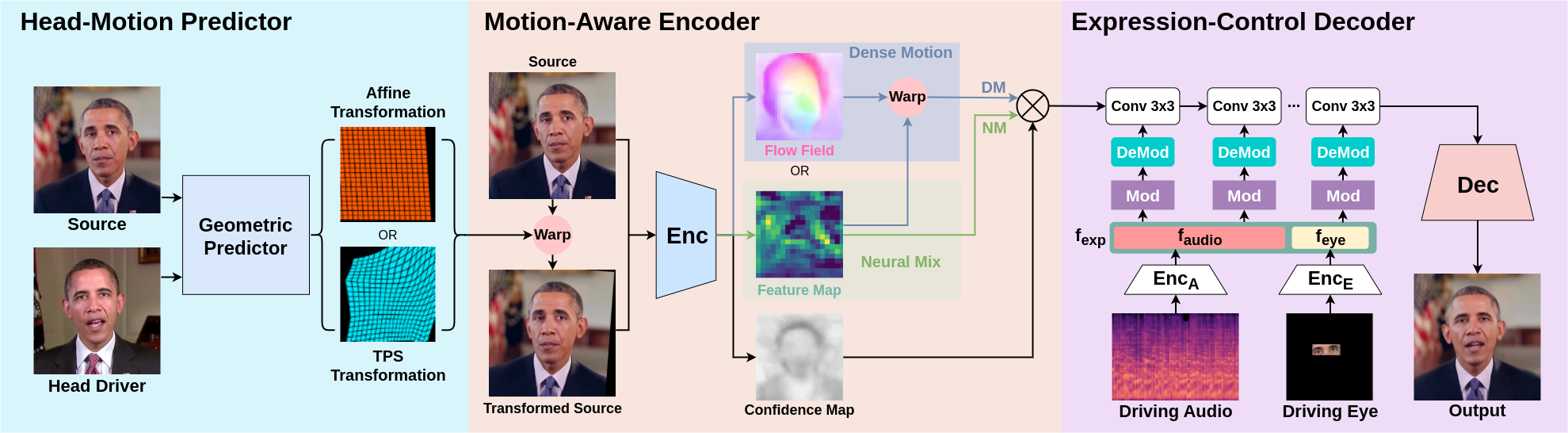

Realistic talking head generation requires natural head motion together with accurate lip synchronization. To address this challenging task, we propose DisCoHead, a novel method that disentangles and controls head pose and facial expressions without supervision. DisCoHead uses a single geometric transformation as a bottleneck to isolate and extract head motion from a head-driving video. Either an affine or a thin-plate spline transformation can be used, and both work well as geometric bottlenecks. We enhance the efficiency of DisCoHead by integrating a dense motion estimator and the encoder of a generator, which are originally separate modules. Taking a step further, we also propose a neural mix approach in which dense motion is estimated and applied implicitly by the encoder. After applying the disentangled head motion to a source identity, DisCoHead controls the mouth region according to speech audio, and it blinks the eyes and moves the eyebrows following a separate driving video of the eye region, via weight modulation of convolutional neural networks. Experiments on multiple datasets show that DisCoHead successfully generates realistic audio-and-video-driven talking heads and outperforms state-of-the-art methods. Project page: https://deepbrainai-research.github.io/discohead
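To make the idea of a geometric bottleneck more concrete, the following is a minimal sketch, not the DisCoHead implementation. It assumes that a single affine transformation is described by a 2x3 matrix (six numbers) and is applied to a whole image by backward mapping with nearest-neighbor sampling. Because one such matrix can only rotate, scale, shear, and translate the entire frame, it can carry global head motion but not local changes such as mouth or eye movement. The function names `affine_params` and `warp_nearest`, the normalized coordinate convention, and the toy 3x3 image are all illustrative assumptions; in the actual method the transformation is estimated by a network from the head-driving video.

```python
import math


def affine_params(angle_deg=0.0, scale=1.0, tx=0.0, ty=0.0):
    """Return a 2x3 affine matrix (hypothetical helper).

    The matrix maps output coordinates to source coordinates, both
    normalized to [-1, 1], using rotation, uniform scale, and translation.
    """
    a = math.radians(angle_deg)
    c = math.cos(a) * scale
    s = math.sin(a) * scale
    return [[c, -s, tx],
            [s, c, ty]]


def warp_nearest(image, theta):
    """Warp a 2D list-of-lists image with one affine matrix.

    Backward mapping: for every output pixel, compute where it samples
    from in the source and copy the nearest source pixel. Samples that
    fall outside the source are filled with 0. Requires height, width > 1.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Normalized coordinates of the output pixel.
            x = 2 * j / (w - 1) - 1
            y = 2 * i / (h - 1) - 1
            # The same six parameters are used for every pixel.
            sx = theta[0][0] * x + theta[0][1] * y + theta[0][2]
            sy = theta[1][0] * x + theta[1][1] * y + theta[1][2]
            # Back to integer pixel indices (nearest neighbor).
            sj = round((sx + 1) * (w - 1) / 2)
            si = round((sy + 1) * (h - 1) / 2)
            if 0 <= si < h and 0 <= sj < w:
                out[i][j] = image[si][sj]
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

identity = warp_nearest(image, affine_params())
shifted = warp_nearest(image, affine_params(tx=1.0))
rotated = warp_nearest(image, affine_params(angle_deg=90.0))
```

In this sketch, the identity matrix leaves the image unchanged, `tx=1.0` shifts the sampling location by one pixel on the 3x3 grid, and a 90-degree angle rotates the whole grid. Every pixel moves according to the same six parameters, which is the property that makes a single transformation act as a bottleneck for global motion. A thin-plate spline would play the same role with a somewhat larger set of control-point parameters.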

Architecture

Demo Videos

Obama

Cumulative control of head pose, lip movements, and eye expressions by different drivers (Fig. 2)

Separate control of head motion, lip synchronization, and eye expressions by a single driver and their combination

GRID

Identity preservation and faithful transfer of head pose and facial expressions from a single driver (Fig. 3)

Identity preservation and faithful transfer of head pose and facial expressions from multiple drivers

KoEBA

Identity preservation and faithful transfer of head motion and facial expressions from a single driver (Fig. 4)

Identity preservation and faithful transfer of head motion and facial expressions from multiple drivers

Note: The Korean election broadcast addresses dataset (KoEBA) is publicly available at https://github.com/deepbrainai-research/koeba.

Demo Code

Acknowledgements

This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Ministry of Science and ICT (MSIT) of South Korea (No. 2021-0-00888).

Citation

@misc{hwang2023discohead,
  title={DisCoHead: Audio-and-Video-Driven Talking Head Generation by Disentangled Control of Head Pose and Facial Expressions},
  author={Geumbyeol Hwang and Sunwon Hong and Seunghyun Lee and Sungwoo Park and Gyeongsu Chae},
  year={2023},
  eprint={2303.07697},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}